Consistency

- Consistency (or precision) is the ability of an instrument to measure a quantity in a consistent manner, with only a small relative deviation between readings.

- The consistency of a set of readings can be indicated by its relative deviation.

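- The relative deviation is the mean deviation of a set of measurements expressed as a percentage of their mean value. It is defined by the following formula:

$$\text{Relative deviation} = \frac{\text{mean deviation}}{\text{mean value}} \times 100\%$$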
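As a rough illustration of the relative deviation described above, the sketch below assumes it is the mean deviation divided by the mean value, expressed as a percentage. The function name `relative_deviation` and the sample readings are hypothetical and chosen only for this example.

```python
def relative_deviation(readings):
    """Return the relative deviation of a set of readings, in percent.

    Assumes: relative deviation = (mean deviation / mean value) x 100%.
    """
    mean = sum(readings) / len(readings)
    # Mean deviation: average of the absolute differences from the mean.
    mean_dev = sum(abs(x - mean) for x in readings) / len(readings)
    return 100 * mean_dev / mean


# Hypothetical set of four readings (for example, lengths in cm).
readings = [9.0, 10.0, 11.0, 10.0]
print(f"{relative_deviation(readings):.1f} %")  # prints "5.0 %"
```

A smaller relative deviation indicates a more consistent set of readings.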

Accuracy

- The accuracy of a measurement is how close the measurement is to the actual value of a physical quantity.

- A measurement is regarded as more accurate when it can be recorded to a greater number of significant figures.

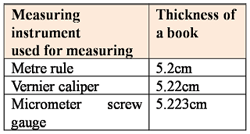

- The table above shows that the micrometer screw gauge is more accurate than the other measuring instruments.

- The accuracy of a measurement can be increased by:

  - taking several repeat readings and calculating their mean value.

  - avoiding end errors and zero errors.

  - taking zero errors and parallax errors into account.

  - using a more sensitive instrument, such as a vernier caliper in place of a ruler.
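Two of the steps listed above, averaging repeat readings and allowing for a zero error, can be sketched as follows. The sketch assumes the usual convention that the corrected reading is the observed reading minus the zero error. The function name `corrected_mean` and all values are hypothetical.

```python
def corrected_mean(readings, zero_error=0.0):
    """Average repeated readings, then subtract the instrument's zero error.

    Assumes: corrected reading = observed reading - zero error.
    """
    mean = sum(readings) / len(readings)
    return mean - zero_error


# Hypothetical vernier caliper readings in cm, with a zero error of +0.02 cm.
readings = [2.54, 2.56, 2.55]
print(f"{corrected_mean(readings, zero_error=0.02):.2f} cm")  # prints "2.53 cm"
```

Averaging reduces the effect of random scatter between readings, while the zero-error correction removes a fixed offset that averaging alone cannot remove.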

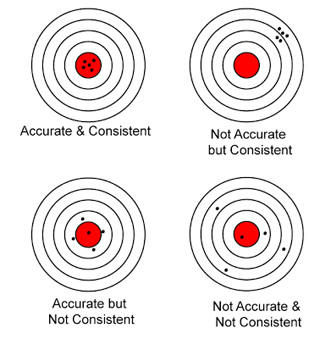

- The difference between precision and accuracy can be illustrated by the spread of shots on a target, as shown in the diagram.
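The same distinction can be put in numbers. In the minimal sketch below, the spread of the readings (their mean deviation) stands in for consistency, and the distance of their mean from the actual value stands in for accuracy. The function name `spread_and_offset`, the actual value, and both sets of readings are hypothetical.

```python
def spread_and_offset(readings, true_value):
    """Return (mean deviation, |mean - true value|) for a set of readings."""
    mean = sum(readings) / len(readings)
    spread = sum(abs(x - mean) for x in readings) / len(readings)
    offset = abs(mean - true_value)
    return spread, offset


true_length = 10.0  # hypothetical actual value, in cm

# Tightly grouped but far from the actual value: consistent, not accurate.
tight_but_off = [10.8, 10.9, 10.8, 10.9]
# Widely scattered but centred on the actual value: accurate on average, not consistent.
wide_but_centred = [9.0, 11.0, 9.5, 10.5]

for name, data in [("tight_but_off", tight_but_off),
                   ("wide_but_centred", wide_but_centred)]:
    spread, offset = spread_and_offset(data, true_length)
    print(f"{name}: spread = {spread:.2f} cm, offset = {offset:.2f} cm")
```

The first set corresponds to shots clustered together away from the bullseye. The second corresponds to shots scattered around the bullseye.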

Sensitivity

- The sensitivity of an instrument is its ability to detect small changes in the quantity that is being measured.

- Thus, a sensitive instrument can quickly detect a small change in the measured quantity.

- Measuring instruments with smaller scale divisions are more sensitive.

- A sensitive instrument is not necessarily accurate.
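As a simple illustration of the link between scale divisions and sensitivity, the sketch below treats a change as detectable when it is at least as large as the instrument's smallest scale division. This rule, the function name `can_detect`, and the division values (typical textbook figures in mm) are assumptions made for the example.

```python
# Typical smallest scale divisions in mm (assumed values for illustration).
smallest_division_mm = {
    "metre rule": 1.0,
    "vernier caliper": 0.1,
    "micrometer screw gauge": 0.01,
}


def can_detect(instrument, change_mm):
    """Return True if the change is at least one smallest scale division."""
    return change_mm >= smallest_division_mm[instrument]


change = 0.05  # hypothetical change in length, in mm
for instrument in smallest_division_mm:
    print(instrument, can_detect(instrument, change))
```

Here only the micrometer screw gauge registers the 0.05 mm change, since it has the smallest scale division of the three.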